Modern application development means web development most of the time. Generally, a web stack consists of

- a backend, i.e. code that contains business logic and connects to a database. The backend delivers content to the frontend

- a frontend, i.e. code that runs in the browser (HTML, CSS, JavaScript) and connects to the backend

- a persistent data store, i.e. a database or an object storage that stores application data between sessions

Back in the day, this could be as simple as a LAMP stack: You get your shared web hosting package, upload a bunch of PHP files that dynamically generate HTML and connect to a MySQL database on the same host. While these setups are still a viable option in some cases and, in fact, probably run the majority of websites out there, modern web applications need to account for scalability, reliability, and specialised teams working autonomously on each part of the software.

What would that look like in practice? In this article, I will guide you through a cloud-based “Hello World” application: We will create an API that greets a user based on their name, and stores that name in a database. At the same time, we will create a React app for the frontend that connects to that API.

We will build all of this with serverless services provided by AWS. Here are the services that we will use in particular:

AWS Lambda

AWS Lambda is a serverless runtime that executes code without us having to manage any servers.

We will use Python to write our first Lambda function.

AWS API Gateway

The API Gateway connects our Lambda function with the outside world. In our example, we use HTTP for this connection, but other means of access are possible, too, such as WebSockets.
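To make that connection a little more concrete, here is a minimal sketch of the contract between API Gateway and a Python function: the request arrives as a dictionary, and the function answers with a dictionary holding a status code and a body. The name `greet_handler` and the two trimmed-down sample events are assumptions for illustration; real events carry many more fields.

```python
def greet_handler(event, context=None):
    """Toy Lambda-style handler: reads ?name=... and returns an HTTP-like response."""
    # The query-string mapping may be absent or None when the request has no
    # query string, so fall back to an empty dict before looking up "name".
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "Bas")
    return {"statusCode": 200, "body": f"Hello, {name}!"}


# Hypothetical, heavily trimmed events for illustration only.
with_name = {"rawPath": "/", "queryStringParameters": {"name": "Ada"}}
without_query = {"rawPath": "/"}

print(greet_handler(with_name)["body"])      # Hello, Ada!
print(greet_handler(without_query)["body"])  # Hello, Bas!
```

Because the handler is a plain function, it can be called locally like this without deploying anything.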

AWS Amplify

AWS Amplify is a build and hosting environment for static websites. We can provide code, such as a React application, that gets built on AWS Amplify and hosted right there. Later, we will be able to access our app in the browser.

Amazon DynamoDB

Amazon DynamoDB is a serverless database with some interesting features. We will use it to store each requested name.

So, let’s dive right in.

The Code

API

    import json
    from time import gmtime, strftime

    import boto3

    dynamodb = boto3.resource("dynamodb")
    table = dynamodb.Table("persistence-example")


    def lambda_handler(event, context):
        # queryStringParameters may be missing or None if no query string is sent.
        name = (event.get("queryStringParameters", {}) or {}).get("name", "Bas")
        # Compute the timestamp per request. At module level it would only be
        # evaluated once per container and go stale on later invocations.
        now = strftime("%a, %d %b %Y %H:%M:%S +0000", gmtime())
        table.put_item(Item={"ID": str(name), "LatestGreetingTime": now})
        return {"statusCode": 200, "body": f"Hello, {name}!"}

Frontend

For the frontend, we use create-react-app. Our app should just include an input field for the name and a submit button.

The submit button should send the value of the input field to our API Gateway, so that it reaches our Lambda function. We do not know the endpoint of the API yet: AWS will assign a random hostname to it, and we have not taken care of assigning a predefined hostname. The React app still needs to know about this endpoint, so we will pass it in through an environment variable. Later, we will use Terraform to fill that variable.
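The same idea can be sketched outside the browser: read the endpoint from the environment and append the name as a query parameter. This is only an illustration in Python, not part of the project; `build_greeting_url`, the variable name `API_ENDPOINT`, and the placeholder host are all assumptions.

```python
import os
from urllib.parse import urlencode


def build_greeting_url(endpoint, name):
    """Return the URL to call for a given name."""
    # urlencode escapes spaces and special characters in the name.
    query = urlencode({"name": name})
    return f"{endpoint.rstrip('/')}/?{query}"


# API_ENDPOINT is a hypothetical variable name; the fallback host is a placeholder.
endpoint = os.environ.get("API_ENDPOINT", "https://example.invalid")
print(build_greeting_url(endpoint, "Ada Lovelace"))
```

Escaping the name matters as soon as a user types a space or an ampersand; concatenating the raw input would produce a broken or misleading query string.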

Here is the code for our App.js:

    import { useState } from 'react';
    import logo from './logo.svg';
    import './App.css';

    function App() {
      // Keep the current value of the input field in component state.
      const [name, setName] = useState('');

      const onSubmit = () => {
        // The endpoint is injected at build time through an environment variable.
        const url = `${process.env.REACT_APP_API_ENDPOINT}/?name=${encodeURIComponent(name)}`;
        fetch(url)
          .then(response => response.text())
          .then(data => alert(data));
      };

      return (
        <div className="App">
          <header className="App-header">
            <img src={logo} className="App-logo" alt="logo" />
            <p><label htmlFor="name">Name <input type="text" id="name" value={name} onChange={e => setName(e.target.value)} /></label></p>
            <p><button id="submit" onClick={onSubmit}>OK</button></p>
          </header>
        </div>
      );
    }

    export default App;

The Infrastructure

We will use HashiCorp’s Terraform to deploy all the services we need to AWS. In complex environments, nobody is going to click through the AWS console to set up all the different components. Rather, the definition of the required infrastructure is part of the source code. Hence, it is version controlled, can automatically be tested and deployed, and developers are in full charge of its behaviour. This is exactly what is described by the term “DevOps”: Developers managing Operations. You might have also heard the term “Infrastructure as Code”, which describes the idea that the infrastructure configuration is laid out in a machine-readable format.

And this is exactly what Terraform tries to achieve here: We no longer need the AWS console, we just configure our infrastructure requirements in a text file called main.tf.

Configuring the provider

Terraform works with .tf configuration files. Since it works with different cloud providers, we need to set up the provider for AWS in our main.tf first:

    provider "aws" {
      region     = "us-west-2"
      access_key = "<YOUR ACCESS KEY>"
      secret_key = "<YOUR ACCESS KEY SECRET>"
    }

Making a source code archive

Our Lambda function code resides in the same repository, in the lambda sub-directory. We use Terraform’s archive_file data source here, which allows us to create a zip file from the Python sources in that directory. We will use that zip file to deploy our Lambda function to AWS in the next section.

    data "archive_file" "lambda-zip" {
      type        = "zip"
      source_dir  = "lambda"
      output_path = "lambda.zip"
    }

Create the Lambda Function

A resource represents “something” deployed to AWS. For our Lambda function, we need two of them:

- The Lambda function itself

- An IAM role for the Lambda function

    resource "aws_iam_role" "lambda-iam" {
      name = "lambda-iam"

      assume_role_policy = <<EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": "sts:AssumeRole",
          "Principal": {
            "Service": "lambda.amazonaws.com"
          },
          "Effect": "Allow",
          "Sid": ""
        }
      ]
    }
    EOF
    }

    resource "aws_lambda_function" "lambda" {
      filename         = "lambda.zip"
      function_name    = "lambda-function"
      role             = aws_iam_role.lambda-iam.arn
      handler          = "lambda.lambda_handler"
      source_code_hash = data.archive_file.lambda-zip.output_base64sha256
      runtime          = "python3.8"
    }

Note that the role attribute in our Lambda function references the ARN of the lambda-iam role. This is not a literal value but a reference that Terraform resolves for us. It solves a chicken-and-egg problem: since the IAM role does not exist before the first run, we do not know which ARN it is going to have, and so we do not know what to put into the role attribute of our aws_lambda_function definition. Terraform creates the role first and then fills in its ARN.
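Conceptually, Terraform derives a dependency graph from such references and creates resources in an order that respects it. The following is only a toy model of that idea using Python's standard library, not how Terraform is actually implemented; the `depends_on` dictionary is written by hand from the references in our main.tf.

```python
from graphlib import TopologicalSorter

# Each key depends on the resources in its set (names taken from main.tf).
depends_on = {
    "aws_iam_role.lambda-iam": set(),
    "data.archive_file.lambda-zip": set(),
    "aws_lambda_function.lambda": {
        "aws_iam_role.lambda-iam",
        "data.archive_file.lambda-zip",
    },
}

# static_order() yields every node only after all of its dependencies.
creation_order = list(TopologicalSorter(depends_on).static_order())
print(creation_order)
```

Whatever order the two independent entries come out in, the Lambda function is always last, because both the role's ARN and the archive must exist before it can be created.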

The other attribute that is set through a reference is source_code_hash. Terraform computes the base64-encoded SHA-256 hash of our lambda.zip file, which lets it detect when the code has changed and the function needs to be redeployed.
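To get a feeling for what such a value looks like, here is a small sketch that computes a base64-encoded SHA-256 digest in Python. The helper name `base64_sha256` and the sample byte strings are made up for illustration; Terraform does this for us on the real zip file.

```python
import base64
import hashlib


def base64_sha256(data: bytes) -> str:
    """Return the base64-encoded SHA-256 digest of the given bytes."""
    digest = hashlib.sha256(data).digest()  # raw 32-byte digest, not hex
    return base64.b64encode(digest).decode("ascii")


# Two stand-ins for the contents of lambda.zip before and after a code change.
print(base64_sha256(b"first version of lambda.zip"))
print(base64_sha256(b"second version of lambda.zip"))
```

Even a one-byte change in the archive yields a completely different string, which is what makes the hash a cheap signal that the function code has changed.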

Connect to API Gateway

    resource "aws_apigatewayv2_api" "lambda-api" {
      name          = "v2-http-api"
      protocol_type = "HTTP"
    }

    resource "aws_apigatewayv2_stage" "lambda-stage" {
      api_id      = aws_apigatewayv2_api.lambda-api.id
      name        = "$default"
      auto_deploy = true
    }

    resource "aws_apigatewayv2_integration" "lambda-integration" {
      api_id               = aws_apigatewayv2_api.lambda-api.id
      integration_type     = "AWS_PROXY"
      integration_method   = "POST"
      integration_uri      = aws_lambda_function.lambda.invoke_arn
      passthrough_behavior = "WHEN_NO_MATCH"
    }

    resource "aws_apigatewayv2_route" "lambda-route" {
      api_id    = aws_apigatewayv2_api.lambda-api.id
      route_key = "GET /{proxy+}"
      target    = "integrations/${aws_apigatewayv2_integration.lambda-integration.id}"
    }

    resource "aws_lambda_permission" "api-gw" {
      statement_id  = "AllowExecutionFromAPIGateway"
      action        = "lambda:InvokeFunction"
      function_name = aws_lambda_function.lambda.arn
      principal     = "apigateway.amazonaws.com"
      source_arn    = "${aws_apigatewayv2_api.lambda-api.execution_arn}/*/*/**"
    }

Deploy the Frontend

Our frontend consists of a simple app built with create-react-app. It resides in the frontend directory of our repository.

We will use AWS Amplify to deploy this app. For this, we need two resources in AWS:

- The AWS Amplify app itself

- The branch that we set up our deployment for. It’s master in our case.

    resource "aws_amplify_app" "frontend" {
      name         = "frontend"
      repository   = "https://github.com/sebst/aws-tf-frontend-example"
      access_token = "<YOUR ACCESS TOKEN>"

      build_spec = <<-EOF
        version: 0.1
        frontend:
          phases:
            preBuild:
              commands:
                - npm install
            build:
              commands:
                - npm run build
          artifacts:
            baseDirectory: build
            files:
              - '**/*'
          cache:
            paths:
              - node_modules/**/*
      EOF

      enable_auto_branch_creation = true

      custom_rule {
        source = "/<*>"
        status = "404"
        target = "/index.html"
      }

      environment_variables = {
        ENV = "test"
        # Must match the variable name that App.js reads.
        REACT_APP_API_ENDPOINT = "${aws_apigatewayv2_api.lambda-api.api_endpoint}"
      }
    }

    resource "aws_amplify_branch" "master" {
      app_id      = aws_amplify_app.frontend.id
      branch_name = "master"
      framework   = "React"
      stage       = "PRODUCTION"
    }

Add the DynamoDB database

DynamoDB is a proprietary NoSQL database in the AWS universe. It uses tables to store its data, although these tables are non-relational and don’t follow a predefined schema. It is thus possible to add arbitrary attributes to items in a table. However, the hash_key has to be provided for every item. This is the equivalent of the primary key in a relational database.
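The following toy model illustrates these rules with a plain Python dictionary. It is not the DynamoDB API, just a sketch of the behaviour described above; `put_item` here is a hypothetical local helper, and the attribute `FavouriteColour` is invented for the example.

```python
HASH_KEY = "ID"  # same attribute name as the hash_key of our table


def put_item(table, item):
    """Store an item, replacing any existing item with the same hash key."""
    if HASH_KEY not in item:
        raise ValueError(f"missing hash key {HASH_KEY!r}")
    table[item[HASH_KEY]] = item


table = {}
# Items may carry different attributes, as long as the hash key is present.
put_item(table, {"ID": "Bas", "LatestGreetingTime": "Mon, 01 Jan 2024 10:00:00 +0000"})
put_item(table, {"ID": "Ada", "FavouriteColour": "green"})
# Writing the same key again replaces the earlier item.
put_item(table, {"ID": "Bas", "LatestGreetingTime": "Tue, 02 Jan 2024 09:30:00 +0000"})

print(len(table))  # 2
```

This is also why our table ends up with one item per name: greeting the same name twice overwrites the stored timestamp rather than adding a second row.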

    resource "aws_dynamodb_table" "persistence-example" {
      name           = "persistence-example"
      billing_mode   = "PROVISIONED"
      read_capacity  = 20
      write_capacity = 20
      hash_key       = "ID"

      attribute {
        name = "ID"
        type = "S"
      }
    }

Enabling Write Access to DynamoDB in our lambda-iam role

One last step is to attach an Allow policy for DynamoDB actions to our lambda-iam role. This enables secure, password-less access to the DynamoDB we created in the previous step.

We limit the access to the persistence-example DynamoDB table.

If you want to learn more about IAM, roles, and policies, head over to my introductory article on AWS IAM.

    resource "aws_iam_role_policy" "dynamodb_lambda_policy" {
      name = "lambda-dynamodb-policy"
      role = aws_iam_role.lambda-iam.id

      policy = jsonencode({
        "Version" : "2012-10-17",
        "Statement" : [
          {
            "Effect" : "Allow",
            "Action" : ["dynamodb:*"],
            "Resource" : "${aws_dynamodb_table.persistence-example.arn}"
          }
        ]
      })
    }

Putting it all together

You can download the project from GitHub.

Clone the repository with

    git clone git@github.com:codewithbas/aws-tf-example.git
    cd aws-tf-example

Once you have adapted the secrets inside the main.tf file, you can use Terraform to initialise the working directory and then plan and apply your architecture to AWS:

    terraform init
    terraform plan
    terraform apply

Checking in your console

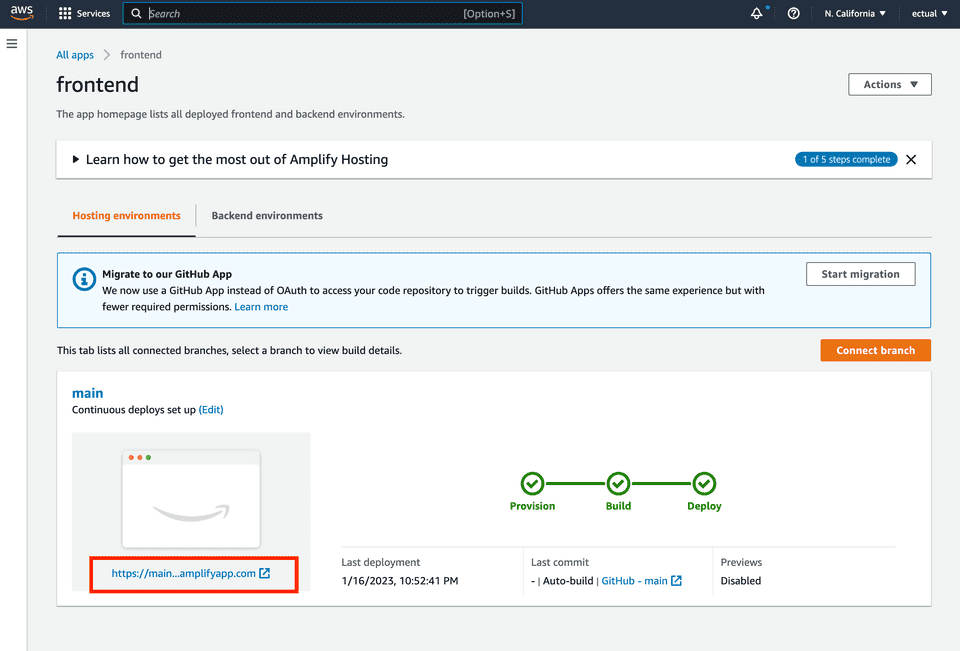

In your AWS console, head over to AWS Amplify to see your frontend app. You will see the build process triggered from the GitHub repository as well as the URL (the red rectangle in the screenshot).

Of course, it’d be possible to assign a custom domain name along with proper SSL/TLS certificates to your AWS deployment, but I will leave that for a later article.

Cleaning up

In order to not waste any resources that might lead to unintended cost increases, make sure you delete this example project from your AWS account with

terraform destroy