Using Pulumi to Automatically Benchmark Cloud Providers

In this article, we will use Pulumi to provision virtual machines in the cloud and compare their performance.

By the end of the article, you will know:

- how to create multiple cloud instances using the Pulumi Automation API in Python

- how to use cloud-init to provision those instances

- how to use yabs.sh to create reproducible benchmarks and extract performance data

- how to plot the data using matplotlib and Python

Ever wondered which cloud provider gives you the best bang for the buck when it comes to virtual machines? You’ve probably seen my December 2023 benchmark, which produced some unexpected results, especially at the lower end of the price range. In that benchmark, Linode and Hetzner stood out with strong performance at low prices.

You may want to conduct your own benchmark on the cloud providers of your choice. Here’s how to do that with Pulumi.

In the example, we cover Pulumi programs for these three cloud providers:

- Linode

- Hetzner

- DigitalOcean

The benchmark script we use is yabs.

You can find the project files on GitHub.

What is Pulumi?

Pulumi is an infrastructure as code (IaC) tool that lets developers manage cloud resources using familiar programming languages such as Python, JavaScript, TypeScript, and Go. Unlike traditional IaC tools such as Terraform, which rely on static configuration files, Pulumi uses general-purpose programming languages to manage infrastructure across multiple cloud providers.

For a quick start, you can use Pulumi locally – without their cloud offering.

Getting started

To get started, we need to set up a Python project. Don’t worry: the GitHub repository contains a DevContainer configuration to get you started quickly in VS Code.

For the examples to run, you need accounts with all the providers covered and the corresponding API tokens. Export the tokens with the following commands:

export HCLOUD_TOKEN=xxxxxxxxxxxx
export LINODE_TOKEN=xxxxxxxxxxxx
export DIGITALOCEAN_TOKEN="dop_v1_xxxxxxxxxxxx"

Pulumi is designed to run with Pulumi Cloud, which lets a team share stack state. You can, however, also use Pulumi locally. To do so, set the following environment variables and log in to your local workspace:

export PULUMI_CONFIG_PASSPHRASE=
export PULUMI_CONFIG_PASSPHRASE_FILE=
export PULUMI_ACCESS_TOKEN=
pulumi login --local

Without further ado, you should now be able to run the Python file run_benchmark.py, which provisions one machine on each of the three providers and produces a nice plot of the fio results.
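If one of the tokens is missing, the problem would typically only surface when that provider's stack is deployed, possibly after machines on other providers are already running. A quick pre-flight check can catch this earlier. The following is a minimal sketch; `REQUIRED_VARS` and `missing_env_vars` are hypothetical names, not part of the project:

```python
import os
from typing import Iterable, List, Mapping, Optional

# Hypothetical list of the provider tokens exported above.
# The PULUMI_* variables are deliberately left out: they are set to empty values on purpose.
REQUIRED_VARS = ("HCLOUD_TOKEN", "LINODE_TOKEN", "DIGITALOCEAN_TOKEN")


def missing_env_vars(
    names: Iterable[str] = REQUIRED_VARS,
    environ: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Return the names of variables that are unset or empty."""
    # Default to the real process environment; a mapping can be passed in for testing
    env = os.environ if environ is None else environ
    return [name for name in names if not env.get(name)]
```

Calling `missing_env_vars()` at the start of run_benchmark.py and exiting when the returned list is non-empty would stop the run before any stack is created.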

How does it work?

We create a Pulumi program for each provider: Linode, Hetzner, and DigitalOcean in our case. The files look quite similar and follow the data structures of each provider's Pulumi package. You can find more provider packages, together with their documentation, in the Pulumi Registry.
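Put together, the per-provider pieces form a simple loop: benchmark each provider, record the result, and tear the machine down afterwards so nothing keeps running (and billing). The excerpts in this article do not show a teardown step. The sketch below assumes hypothetical `setup` and `teardown` callables per provider; in practice, `setup` would be a module's setup function, and `teardown` could call `stack.destroy()` from the Pulumi Automation API on the stack named `benchmark-{provider}`:

```python
from typing import Callable, Dict, Mapping, Optional, Tuple

# A hypothetical pair of callables per provider:
#   setup(provider)    -> benchmark result (e.g. the parsed yabs JSON), or None
#   teardown(provider) -> None (e.g. destroys the provider's stack)
Steps = Tuple[Callable[[str], Optional[dict]], Callable[[str], None]]


def run_all(providers: Mapping[str, Steps]) -> Dict[str, Optional[dict]]:
    """Benchmark each provider in turn, always tearing its resources down."""
    results: Dict[str, Optional[dict]] = {}
    for name, (setup, teardown) in providers.items():
        try:
            results[name] = setup(name)
        except Exception as exc:  # keep going so the remaining providers still run
            print(f"{name}: benchmark failed: {exc}")
            results[name] = None
        finally:
            # Runs whether setup succeeded or failed
            teardown(name)
    return results
```

Catching the exception per provider keeps one failing account from aborting the whole comparison, while the `finally` clause makes sure the teardown runs either way.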

A Pulumi Program for each provider

As an example, this is what the Pulumi program for DigitalOcean looks like:

import pulumi
import pulumi_digitalocean


def run():
    # [...]

    # Create a droplet
    server = pulumi_digitalocean.Droplet(
        "benchmark-instance-digitalocean",
        image=image,
        region=region,
        size=size,
        ssh_keys=[ssh_key.fingerprint],
        user_data=cloud_init,
        opts=pulumi.ResourceOptions(depends_on=[ssh_key]),
    )

    # Export the IP address of the server
    pulumi.export("ip", server.ipv4_address)
    # Export the SSH key pair so the benchmark step can connect to the server
    pulumi.export("ssh_key_private", private_key.decode("utf-8"))
    pulumi.export("ssh_key_public", public_key.decode("utf-8"))

Pulumi takes “Infrastructure as Code” literally: resources are defined in a general-purpose programming language, here Python, which makes Pulumi programs highly customizable. The flip side is that this dynamic approach makes it somewhat easier to introduce bugs than with IaC tools based on static configuration files. Pulumi's dependency management offsets part of this risk by ordering resource operations correctly, for example provisioning the “droplet” only after the ssh_key it depends on exists. In the program above, that dependency is expressed twice: implicitly, because the droplet uses ssh_key.fingerprint as an input, and explicitly through depends_on.
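Conceptually, this ordering is a topological sort of the resource dependency graph. The following sketch illustrates the idea with Python's standard `graphlib` module; it is not how Pulumi's engine is implemented. `ssh_key` and `droplet` mirror the DigitalOcean program, while `volume` is a hypothetical extra resource added to show that independent resources can be handled in the same batch:

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Dependency graph: resource -> set of resources it depends on.
# "ssh_key" and "droplet" mirror the DigitalOcean program;
# "volume" is a hypothetical resource added for illustration.
GRAPH: Dict[str, Set[str]] = {
    "ssh_key": set(),
    "volume": set(),
    "droplet": {"ssh_key", "volume"},
}


def creation_order(graph: Dict[str, Set[str]]) -> List[str]:
    """Return one order in which every resource comes after its dependencies."""
    return list(TopologicalSorter(graph).static_order())


def creation_batches(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group resources into batches whose members do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        # All resources whose dependencies are already satisfied
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches
```

Here `creation_batches(GRAPH)` yields the SSH key and the volume first and the droplet last. Pulumi likewise runs operations on independent resources in parallel where it can, which is why declaring dependencies correctly matters.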

Provisioning using cloud-init

cloud-init is a widely used initialization system for cloud instances that configures and customizes a machine during boot. Most cloud providers support it. We use it to give the benchmark instances an initial configuration: it installs curl (along with ca-certificates) and writes a wrapper for the benchmark script to /root/benchmark.sh:

#cloud-config
write_files:
  - path: /root/benchmark.sh
    owner: root:root
    permissions: '0744'
    content: |
      #!/usr/bin/env sh
      # -j: print the results as JSON, -g: skip the Geekbench test
      curl -sL yabs.sh | bash -s -- -j -g
apt:
  conf: |
    APT::Install-Recommends "0";
    APT::Install-Suggests "0";
    APT::Get::Assume-Yes "true";
    Debug::Acquire::http "true";
    Debug::Acquire::https "true";
    Debug::pkgAcquire::Worker "1";
package_update: true
package_upgrade: true
packages:
  - ca-certificates
  - curl

We pass this YAML file to our Pulumi program. Providers that support cloud-init usually accept it through an argument named user_data, metadata, or similar; see each provider's documentation in the Pulumi Registry for details. In the DigitalOcean example above, the cloud-init file is passed like this:

# [...]
user_data=cloud_init,
# [...]

Running the script and collecting the results

In the setup function of each cloud provider’s module, we create (or select) the Pulumi stack, read the IP address and the SSH key from its outputs, and then use the paramiko package to run the benchmark script via SSH.
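A freshly created instance may not accept SSH connections the moment `stack.up()` returns. A small retry loop can bridge that gap. This is a minimal sketch using only the standard library; `wait_for_port` is a hypothetical helper, not part of the project or of paramiko. Once connected, running `cloud-init status --wait` on the instance before /root/benchmark.sh would additionally make sure cloud-init has finished installing curl.

```python
import socket
import time


def wait_for_port(host: str, port: int = 22, timeout: float = 300.0, interval: float = 5.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires.

    Returns True as soon as the port accepts a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a plain TCP connection as a probe
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, timed out, or unreachable: retry until the deadline passes
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, calling `wait_for_port(ip)` right before `ssh.connect(...)` and aborting if it returns False avoids a connection error on a machine that is still booting.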

Data is collected in JSON format from stdout.

# Excerpt from a provider module's setup function
import json

import paramiko
import pulumi.automation

# [...]

stack = pulumi.automation.create_or_select_stack(
    stack_name=f"benchmark-{provider}",
    project_name="pulumi-benchmark",
    program=pulumi_program,
)
stack.up()
outputs = stack.outputs()

# [...]

data = None
try:
    # Run /root/benchmark.sh via ssh
    # [...]
    ssh.connect(ip, username="root", pkey=pkey)
    stdin, stdout, stderr = ssh.exec_command("bash /root/benchmark.sh")
    # Try to parse every output line; only the JSON summary printed by yabs (-j) succeeds
    for line in stdout:
        try:
            data = json.loads(line)
        except json.JSONDecodeError:
            pass
    stdin.close()
finally:
    # Close the SSH connection even if the benchmark fails
    ssh.close()

print(">>>>>>>", "benchmark done. ")

# [...]

DATA[provider] = data
return data

Plotting the results

In the main function of run_benchmark.py, we simply call the show_plots function located in plot.py.

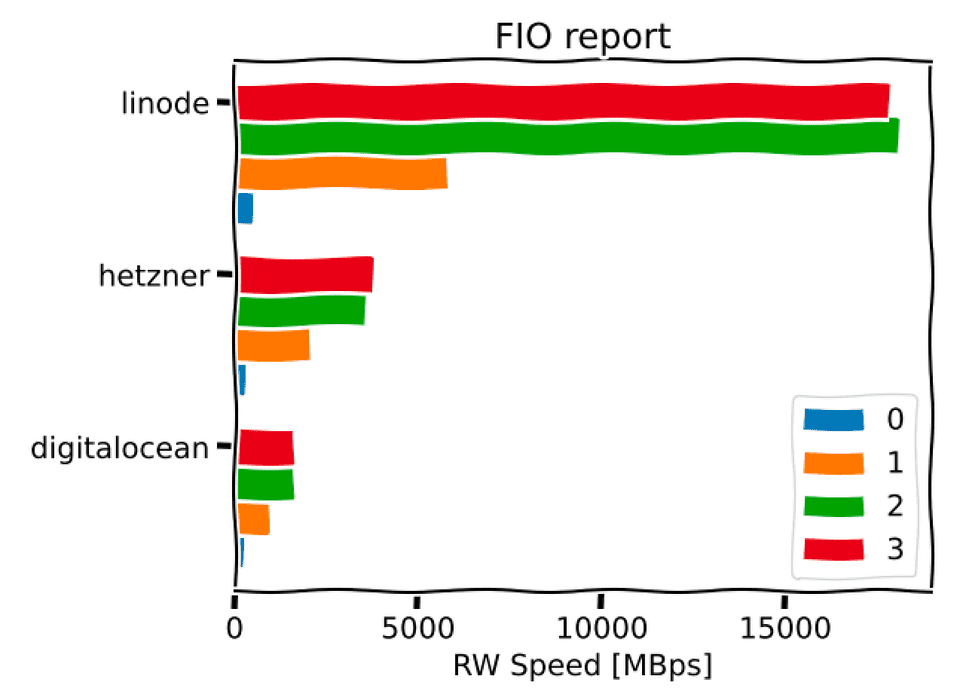

The function takes the fio section of yabs’s JSON output, converts all values to MB/s using the pint library for Python, and plots them in a nice graph using matplotlib.
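The conversion itself is simple arithmetic. As an illustration without pint, the sketch below assumes each fio entry has a block size `bs`, a numeric `speed`, and a unit string `units` such as `KBps`; these field names are hypothetical, so check them against the actual yabs JSON before relying on this. Decimal prefixes (1 MB = 1000 KB) are also an assumption:

```python
from typing import Dict, List

# Assumed decimal factors that convert a rate into MB/s (1 MB = 1000 KB)
TO_MBPS: Dict[str, float] = {
    "Bps": 1e-6,
    "KBps": 1e-3,
    "MBps": 1.0,
    "GBps": 1e3,
}


def to_mbps(value: float, unit: str) -> float:
    """Convert a transfer rate given in `unit` to MB/s."""
    try:
        return value * TO_MBPS[unit]
    except KeyError:
        raise ValueError(f"unknown unit: {unit!r}") from None


def fio_series(entries: List[dict]) -> Dict[str, float]:
    """Map each block size to its speed in MB/s (hypothetical field names)."""
    return {entry["bs"]: to_mbps(entry["speed"], entry["units"]) for entry in entries}
```

pint performs the same conversion through its unit registry and additionally rejects incompatible units; a plain lookup table is enough when only a handful of rate units occur.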

Putting it all together

export HCLOUD_TOKEN=xxxxxxxxxxxx
export LINODE_TOKEN=xxxxxxxxxxxx
export DIGITALOCEAN_TOKEN="dop_v1_xxxxxxxxxxxx"
export PULUMI_CONFIG_PASSPHRASE=
export PULUMI_CONFIG_PASSPHRASE_FILE=
export PULUMI_ACCESS_TOKEN=
pulumi login --local
python3 -m pip install -r requirements.txt
python3 run_benchmark.py

You can find the project files on GitHub.