Web Scraping with Python

Fortunately, in most cases, we can leverage public or paid data sources and perform our automation tests with ease. However, some data is not readily available through an API, or the API comes at an unreasonable price point. This is where Python proves very useful for scraping the data ourselves: it can request a page much like a browser does, parse the returned HTML, and extract the desired data.
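
To make the "parse HTML and extract the desired data" step concrete, here is a minimal sketch that collects all link targets from a page. It uses only the standard library's `html.parser` and an inline sample document instead of a live request; the names `LinkCollector`, `extract_links`, and `SAMPLE_HTML` are made up for this illustration and are not part of the example project further down.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href attribute of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html):
    """Return all link targets found in the given HTML string."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


# Hypothetical sample document standing in for a downloaded page.
SAMPLE_HTML = """
<html><body>
  <a href="/jobs">Jobs</a>
  <a href="https://example.com/pricing">Pricing</a>
  <a>No target</a>
</body></html>
"""

if __name__ == "__main__":
    print(extract_links(SAMPLE_HTML))
    # ['/jobs', 'https://example.com/pricing']
```

In a real scraper, the HTML string would come from an HTTP response, and a library such as BeautifulSoup or lxml usually makes the extraction more convenient.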

Use Cases

There are numerous use cases for web scraping, including:

- Parsing for new job listings on company or job sites

- Monitoring price changes of competitors (or favorite products)

- Scraping contact information from company websites

- Ingesting databases

It’s worth noting that not all of these use cases are legal, and some websites explicitly prohibit the use of scraping mechanisms for automated data processing.
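
One signal a site gives about automated access is its `robots.txt` file. The sketch below checks a URL against such rules with the standard library's `urllib.robotparser`. The rules, the user agent name, and the helper `is_allowed` are assumptions made for this illustration. Note that `robots.txt` is only a convention: a site's terms of service and the applicable law still apply independently of it.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Check whether robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules; a real scraper would download https://<site>/robots.txt.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://example.com/blog"))
    print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://example.com/private/data"))
```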

Prevention

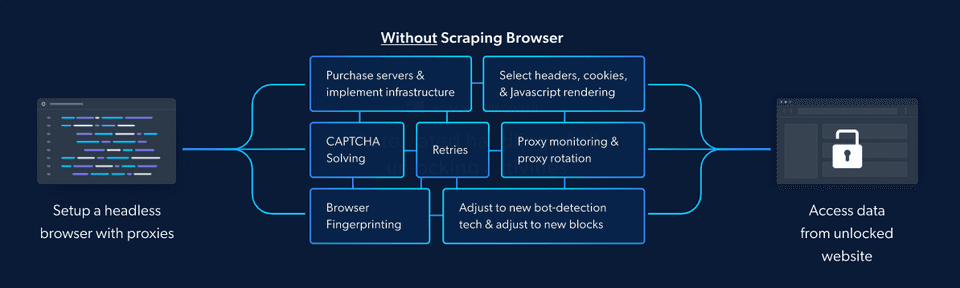

Website operators are well aware of the fact that people may try to scrape their data despite their efforts to prevent it. To deter automated data collection and spam, they put up safeguards like CAPTCHAs, IP range blocking, or User-Agent blocking. Some sites may even detect unusual behavior, such as accessing HTML without downloading CSS or image files.
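
To illustrate the operator's side, here is a rough sketch of how such checks could look when run over an access log. Everything in it is hypothetical: the log format, the marker strings, the threshold of three pages, and the function name `suspicious_clients` are assumptions for this example, not a description of any real product.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Assumed file types that a normal browser would request alongside the HTML.
ASSET_SUFFIXES = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico"}
# Assumed substrings that identify common scripting clients.
BLOCKED_AGENT_MARKERS = ("python-requests", "curl", "scrapy")


def suspicious_clients(access_log, min_pages=3):
    """Return the client IPs that look automated.

    access_log is an iterable of (client_ip, user_agent, path) tuples.
    """
    pages = defaultdict(int)
    assets = defaultdict(int)
    flagged = set()

    for ip, user_agent, path in access_log:
        # User-Agent blocking: flag known scripting clients right away.
        if any(marker in user_agent.lower() for marker in BLOCKED_AGENT_MARKERS):
            flagged.add(ip)
        # Count asset requests separately from page requests.
        suffix = PurePosixPath(path.split("?", 1)[0]).suffix.lower()
        if suffix in ASSET_SUFFIXES:
            assets[ip] += 1
        else:
            pages[ip] += 1

    # Unusual behavior: several pages fetched, but never any CSS or images.
    for ip, count in pages.items():
        if count >= min_pages and assets[ip] == 0:
            flagged.add(ip)
    return flagged
```

Real systems combine many more signals, but the idea is the same: a client that only ever requests HTML stands out from regular browser traffic.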

Solution

There’s a significant market for enabling access to sites that limit programmatic access, and Bright Data is one such solution. I had the opportunity to explore their API and was impressed by how many security measures they could circumvent. Bright Data offers a proxy solution that allows scraping sites that would otherwise be blocked, making it an excellent option for data collection in challenging scenarios.
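
With the `requests` library, routing traffic through a proxy comes down to passing a `proxies` mapping. The sketch below shows one way to build such a mapping. The helper name `build_proxies`, the host `proxy.example.com`, the port, and the credentials are placeholders invented for this illustration; the real values come from your proxy provider's dashboard.

```python
from urllib.parse import quote


def build_proxies(username, password, host, port):
    """Build a requests-style proxies mapping for an authenticated proxy."""
    # Percent-encode the credentials so characters like "@" or ":" are safe.
    auth = f"{quote(username, safe='')}:{quote(password, safe='')}"
    proxy_url = f"http://{auth}@{host}:{port}"
    # The same proxy handles both plain and TLS traffic in this sketch.
    return {"http": proxy_url, "https": proxy_url}


# Placeholder values only; replace them with your provider's settings.
proxy_servers = build_proxies("USERNAME", "PASSWORD", "proxy.example.com", 8080)

# Usage (requires the requests package and a working proxy):
#   import requests
#   response = requests.get("https://example.com", proxies=proxy_servers)
```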

Example Project

I’ve built an example project that scrapes a site for links to social media in its content as well as in its meta tags. Let’s have a look:

```python
import tomllib

import requests
from bs4 import BeautifulSoup
from scrapy.selector import Selector
from lxml import html as lxhtml

from brightdata import proxy_servers


def get_social_xpath(filename="socials.toml"):
    # Load the social networks to look for; each TOML table provides a "domain".
    socials = list()
    with open(filename, "rb") as f:
        data = tomllib.load(f)
    for name, item in data.items():
        socials.append({"name": name, **item})
    # Build a single XPath that matches links pointing to any of the domains.
    s = " or ".join([f"contains(@href, '{site.get('domain')}')" for site in socials])
    s = f".//a[{s}]"
    return s


def get_html(url):
    # The request is routed through the proxies configured in brightdata.py.
    response = requests.get(url, proxies=proxy_servers)
    assert response.status_code == 200
    html = response.text
    return html


def meta_info(url, soup=None):
    if not soup:
        html = get_html(url)
        soup = BeautifulSoup(html, features="lxml")
        tree = lxhtml.fromstring(html)
    # …
    # Yield every link in the page body that points to a known social network.
    social_paths = tree.xpath(get_social_xpath())
    for leaf in social_paths:
        href = leaf.attrib.get("href")
        yield "body", href


if __name__ == "__main__":
    print(list(meta_info("https://bas.bio")))
```

As you can see, all data is routed through a proxy as defined in “brightdata.py”, and the rest of the code uses the lxml package as usual. Its output will be:

```
[('meta-twitter-site', '@bascodes'), ('icons', '/favicon-32x32.png?v=53aa06cf17e4239d0dba6ffd09854e02'), ('body', 'https://github.com/codewithbas'), ('body', 'https://twitter.com/bascodes'), ('body', 'https://www.linkedin.com/in/bascodes/'), ('body', 'https://youtube.com/@bascodes')]
```

Behind the Scenes

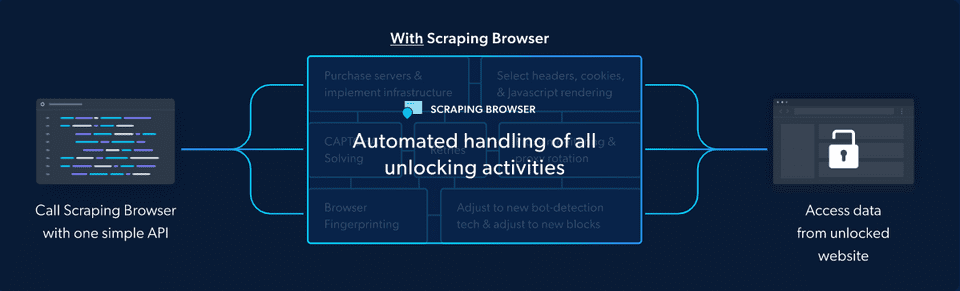

The Solution: Bright Data’s Scraping Browser

Bright Data’s Scraping Browser simplifies and streamlines the web scraping process by offering a comprehensive solution to the challenges mentioned above. It provides developers with a powerful tool that can seamlessly extract data from websites without the need for complex configurations.

Key Benefits of the Scraping Browser API

- Easy integration: The Scraping Browser API is designed for easy integration with your existing web scraping projects, streamlining the setup process and getting you up and running quickly.

- Puppeteer and Playwright compatibility: The API is compatible with both Puppeteer and Playwright, two popular browser automation libraries, giving you the flexibility to use the tools you’re already familiar with.

- Advanced functionality: The Scraping Browser API offers advanced features such as automatic captcha handling, user agent rotation, and proxy management, making it a powerful and comprehensive solution for web scraping.

- Handles dynamic content: The API can efficiently extract data from websites with dynamic content, such as those using AJAX or JavaScript, enabling you to scrape complex web pages that traditional web scraping methods might struggle with.

- Resource management: The Scraping Browser API manages resources such as proxies and user agents, allowing you to focus on the actual data extraction and analysis, rather than spending time and effort on resource management tasks.
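
Since the benefits above mention Playwright compatibility, here is a rough sketch of how a remote browser is typically driven from Playwright's Python API via `connect_over_cdp`. The endpoint format, the host `browser.example.com`, the port, and both helper names are assumptions for this illustration; check Bright Data's documentation for the actual connection details of the Scraping Browser.

```python
from urllib.parse import quote


def build_cdp_endpoint(username, password, host, port):
    """Build a WebSocket endpoint URL with embedded credentials (assumed format)."""
    auth = f"{quote(username, safe='')}:{quote(password, safe='')}"
    return f"wss://{auth}@{host}:{port}"


def fetch_title(endpoint, url):
    """Open url in a remote browser and return the page title."""
    # Imported lazily so the helper above works without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        # Attach to an already running remote browser instead of launching one.
        browser = p.chromium.connect_over_cdp(endpoint)
        try:
            page = browser.new_page()
            page.goto(url)
            return page.title()
        finally:
            browser.close()


# Usage with placeholder values (requires the playwright package and a
# reachable remote browser):
#   endpoint = build_cdp_endpoint("USERNAME", "PASSWORD", "browser.example.com", 9222)
#   print(fetch_title(endpoint, "https://example.com"))
```

Because the browser runs remotely, JavaScript-heavy pages are rendered there, and the script only receives the final result.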

You can try it out here: https://get.brightdata.com/scrapingbrowser_bascodes

GitHub:

https://github.com/sebst/brightdata-scraper

💸 Note: This blog post contains sponsored content. See my policy on sponsored content