Kubernetes for Side Projects?

“You might not need Kubernetes; you’re not Google” – I hear that quite often. And while it is true that the full power of Kubernetes is not needed for smaller-scale web projects, it can come in quite handy.

In this article, I will showcase the benefits of using Kubernetes for smaller projects, and we will set up a Managed Cluster on the Digital Ocean platform.

My personal setup consists of just a few YAML files that create a deployment in my managed Kubernetes cluster, an ingress in the nginx-controller, and a letsencrypt certificate. Of course, I could use services like Heroku, Azure Functions, or other Platform as a Service providers, but the fire-and-forget nature of my Kubernetes cluster is just appealing: I write a Dockerfile, build the image, push it to a container registry, and have it automatically deployed. When I want to switch my cloud provider, all I need is one with a Managed Kubernetes service and a quick basic setup as outlined below. Even better: I can scale it whenever I need.

Benefits

- Reduce vendor lock-in: Docker containers run on any provider, while serverless offerings tie you to one

- Take the Docker containers you develop in VS Code straight to production

- Once set up, almost no maintenance is needed

- Scalability is just one config file away

- Learn Kubernetes along the way

What we’ll do

By the end of this article, you will have

- a Kubernetes instance on Digital Ocean (or any other cloud provider)

- a basic setup to fire up as many namespaced services as you want

- a basic understanding of how Kubernetes works

Let’s start: Set up a Digital Ocean account

In this article, I will use the Digital Ocean Managed Kubernetes Service. You can use any other cloud provider that offers this kind of service, too. If you don’t have a Digital Ocean account yet, you can use my promo link (https://bas.surf/do) to create one. You’ll get $200 in free credits for two months, so you can get started for free!

Prerequisites: You need to have kubectl and helm installed. If that’s not the case, please have a look here:

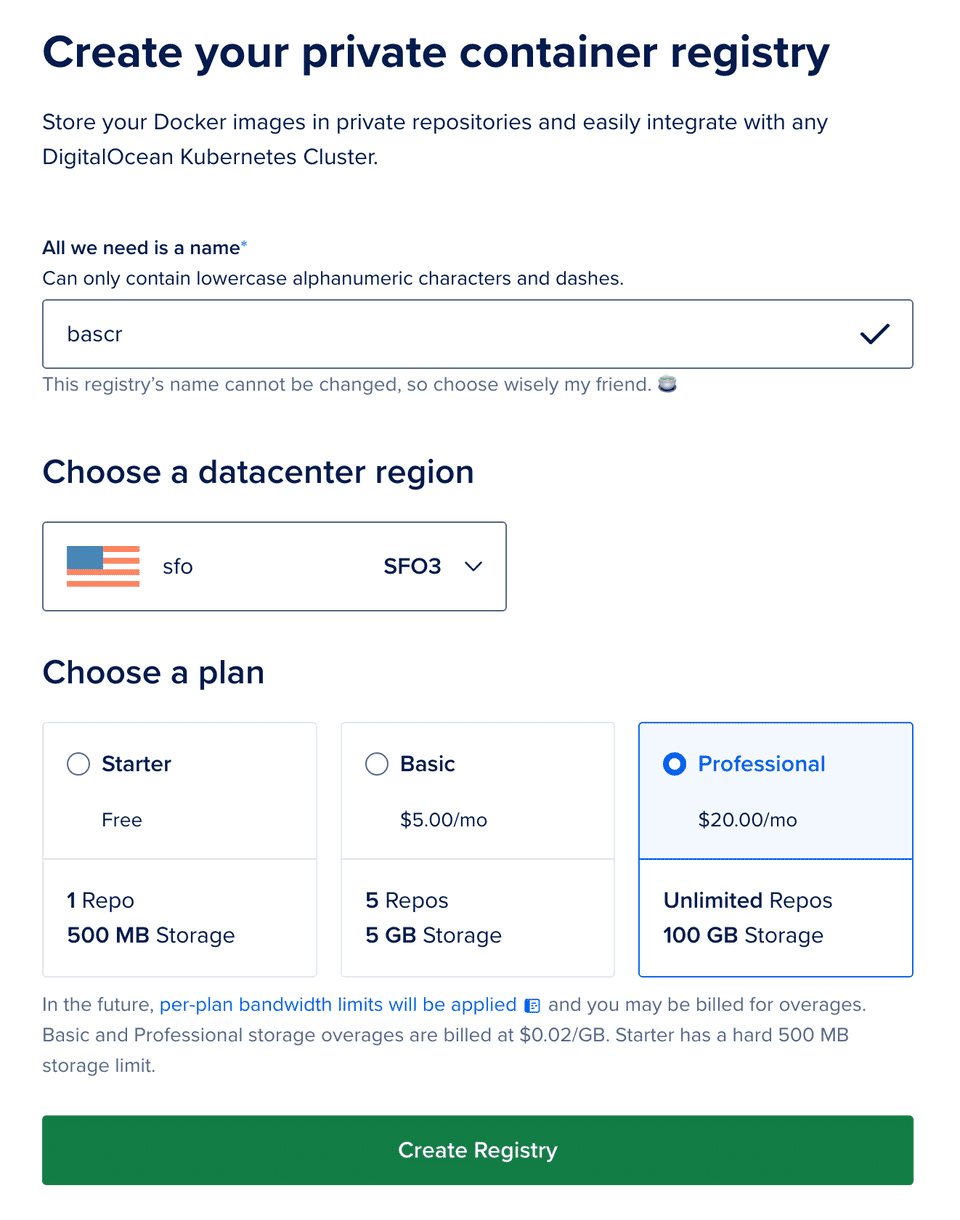

Create a Container Registry

First, you will need to create a container registry from your Digital Ocean dashboard. It should look like this:

The container registry is a private repository where we will store our Docker containers.

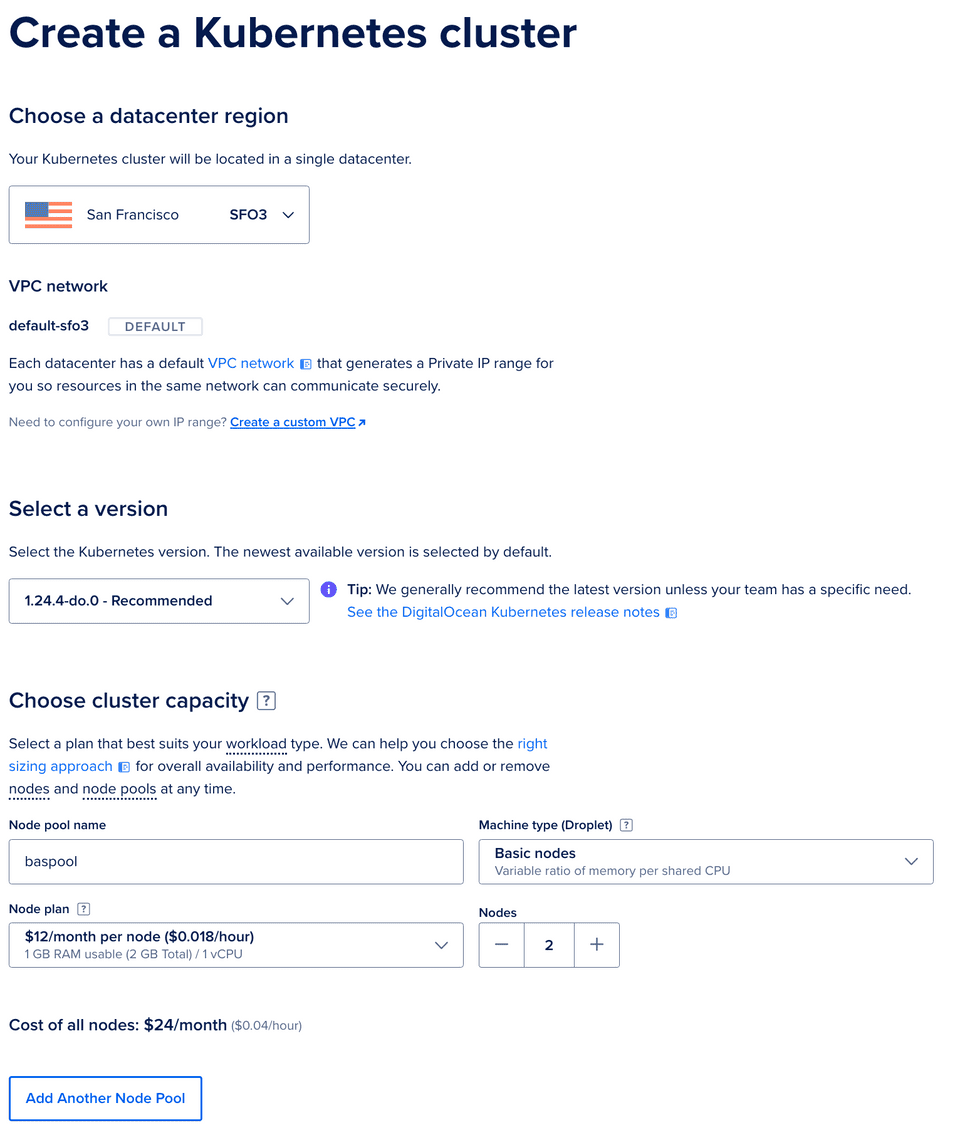

Create a Kubernetes Cluster

Next, create a Managed Kubernetes Cluster. It should look like this.

Remember to select the same region as your container registry. This way, things will just run faster.

Install doctl

If you’re on a Mac and have homebrew installed, you can install the Digital Ocean Command Line Tools with just

brew install doctl

In any other case, please refer to the official documentation.

Connect kubectl to your cluster

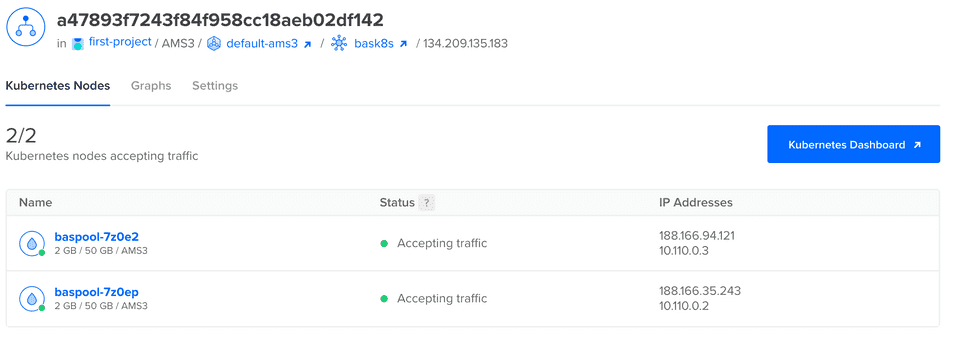

Now, we need to connect your Kubernetes cluster to your kubectl on your laptop. The correct ID can be retrieved from within your Digital Ocean dashboard.

Then, run:

doctl kubernetes cluster kubeconfig save <CLUSTER-ID>

Connect the Container Registry to Your Cluster

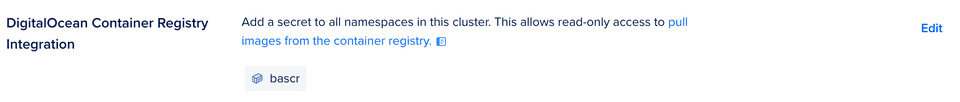

Next, we must ensure that your Container registry is accessible from the Kubernetes Cluster. This can be done with a few clicks in the dashboard and looks like this:

Connect the Container Registry to Your Laptop

The last step is to connect the container registry to your laptop. You can do this by executing:

doctl registry login

Add the ingress-nginx helm package

Add the ingress-nginx Helm repository with:

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

Install the Nginx-ingress

Now, add the nginx-ingress to your cluster:

helm install nginx-ingress ingress-nginx/ingress-nginx --set controller.publishService.enabled=true
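If you prefer a file over --set flags, the same setting can live in a Helm values file. This is only a minimal sketch; the filename values.yml is my own choice for illustration, not something the chart requires.

```yaml
# values.yml (hypothetical filename)
# Equivalent to: --set controller.publishService.enabled=true
controller:
  publishService:
    enabled: true
```

You would then pass it to the same install command: helm install nginx-ingress ingress-nginx/ingress-nginx -f values.yml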

Install the cert-manager

Finally, install the cert-manager in your cluster:

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.10.0/cert-manager.yaml

Get IP of Load Balancer

Find out the IP of your cluster by clicking on the Load Balancer in the resources tab of the Digital Ocean dashboard:

Add DNS records to your domain

Depending on your domain registrar / DNS provider you can now add the IP of your Load Balancer to your DNS records. I will use hellok8s.bas.codes for this example.

Ready to go!

Your Kubernetes cluster is already set up correctly. How do we add a running app to that cluster?

I’ve created a very simple Flask app for demo purposes on GitHub: codewithbas/hellok8s.

Clone the repository and start deploying

You can clone the project as usual with the command

git clone git@github.com:codewithbas/hellok8s.git

Let’s get through the project files:

Dockerfile and flaskapp.py

The Dockerfile is very straightforward. It just installs the requirements (flask) and starts a flask app on port 5000.

docker.sh

docker.sh is just a convenience script that builds the Docker image, tags it, and pushes it to our fresh Digital Ocean Container registry. Make sure you change the registry name to your own:

time docker build -t hellok8s:latest .

docker tag hellok8s:latest registry.digitalocean.com/<YOUR-CR-NAME>/hellok8s:latest

docker push registry.digitalocean.com/<YOUR-CR-NAME>/hellok8s:latest

k8s/*.yml

Now, the interesting part starts! Let’s deploy this app to our Kubernetes cluster.

You can apply all files by typing

kubectl apply -f k8s/

Here is what happens behind the scenes:

Creating a namespace

Each side project we are going to host on our Kubernetes cluster can be isolated in a namespace. In 01-namespace.yml, we will create a namespace called hellok8s for our simple flask app.
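For orientation, a namespace manifest is about as small as Kubernetes objects get. The following is a sketch of what such a file typically looks like; the actual 01-namespace.yml in the repository may differ in details.

```yaml
# Sketch of a namespace manifest; the name matches the one used in this article.
apiVersion: v1
kind: Namespace
metadata:
  name: hellok8s
```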

Creating an Issuer for Letsencrypt

We do want to have automatic TLS encryption, don’t we? That’s why we create an issuer in our cluster. The issuer takes care of storing the certificate inside Kubernetes secrets and doing what is needed to solve the ACME challenges by letsencrypt. All we need to do is change the email address in 02-certificate.yml. This email address is used by letsencrypt to send you important notifications about your certificate.
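To give you an idea of what an issuer looks like, here is a minimal sketch of a cert-manager Issuer using the HTTP-01 challenge through nginx. The issuer name, the secret name, and the email address are placeholders I made up for illustration; the real 02-certificate.yml in the repository may be structured differently.

```yaml
# Sketch only: names and email are placeholders, not taken from the repository.
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: letsencrypt-prod          # hypothetical issuer name
  namespace: hellok8s
spec:
  acme:
    # Let's Encrypt production ACME endpoint
    server: https://acme-v02.api.letsencrypt.org/directory
    email: you@example.com        # change this to your own address
    privateKeySecretRef:
      # Secret in which cert-manager stores the ACME account key
      name: letsencrypt-prod-account-key
    solvers:
      - http01:
          ingress:
            class: nginx          # solve challenges via the nginx ingress
```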

Creating a deployment

The deployment is the part of the whole setup where the magic happens: The Docker container is pulled from our container registry, and it runs on our pool nodes once set up. For our example, we just used one replica of our deployment. If you want (or need) to scale up the application, you can already set any higher number of replicas here in 03-deployment.yml.
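A deployment for an app like this could look roughly as follows. Treat it as a sketch under assumptions: the object name and the app label are my own placeholders, and the real 03-deployment.yml may contain more settings.

```yaml
# Sketch only: names and labels are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hellok8s
  namespace: hellok8s
spec:
  replicas: 1                     # raise this number to scale out
  selector:
    matchLabels:
      app: hellok8s
  template:
    metadata:
      labels:
        app: hellok8s             # must match the selector above
    spec:
      containers:
        - name: hellok8s
          # Replace <YOUR-CR-NAME> with the name of your container registry
          image: registry.digitalocean.com/<YOUR-CR-NAME>/hellok8s:latest
          ports:
            - containerPort: 5000 # the port the Flask app listens on
```

Changing replicas and running kubectl apply again is all it takes to scale the app up or down.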

Creating a service

The service defined in 04-service.yml will now create a NodePort. It will make the deployment’s exposed port (5000) available to Kubernetes on port 80.
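The port mapping described above can be sketched like this. The service name and the app label are assumptions for illustration; the selector has to match whatever labels your deployment puts on its pods.

```yaml
# Sketch only: name and selector label are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: hellok8s
  namespace: hellok8s
spec:
  type: NodePort
  selector:
    app: hellok8s                 # assumed pod label
  ports:
    - port: 80                    # port other cluster components connect to
      targetPort: 5000            # port the container listens on
```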

Creating an ingress

Finally, we need to connect our nginx-ingress to the service we just created (and thus, to the deployment running our Docker container). In 05-ingress.yml we define the HTTP rules to hook it all up.

Remember to change the hostname in that file to your own domain.
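An ingress that hooks a hostname up to a service and requests a certificate could look roughly like the sketch below. It assumes a service named hellok8s listening on port 80 and an issuer named letsencrypt-prod in the same namespace; both names, as well as the TLS secret name, are placeholders, and the real 05-ingress.yml may differ.

```yaml
# Sketch only: service, issuer, and secret names are placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hellok8s
  namespace: hellok8s
  annotations:
    # Ask cert-manager to obtain a certificate from this (assumed) issuer
    cert-manager.io/issuer: letsencrypt-prod
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - hellok8s.bas.codes      # change to your own domain
      secretName: hellok8s-tls    # cert-manager stores the certificate here
  rules:
    - host: hellok8s.bas.codes    # change to your own domain
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: hellok8s
                port:
                  number: 80
```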

Let’s try it

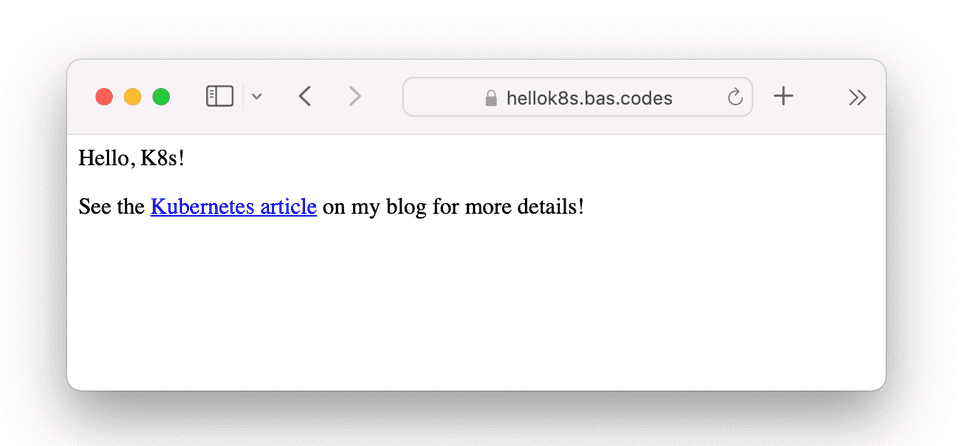

And, tada: We see our web app up and running:

What else?

We now have a comfortable way to host applications packaged as Docker containers, and we could add more features:

- Storage: If you need persistent storage, you can use PersistentVolumeClaims to do so.

- Databases: Using persistent volume claims, it becomes easy to spin up database servers inside your Kubernetes cluster.

- Cron Jobs: Run Docker images periodically
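As a rough preview of two of these features, here is a sketch of a storage claim and a cron job. All names, the schedule, and the size are made up for illustration, and do-block-storage is my assumption for the storage class on Digital Ocean; you can omit that line to use your cluster's default.

```yaml
# Sketch only: names, size, schedule, and storage class are assumptions.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: hellok8s-data
  namespace: hellok8s
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: do-block-storage  # assumed; omit to use the default class
  resources:
    requests:
      storage: 1Gi
---
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hellok8s-nightly
  namespace: hellok8s
spec:
  schedule: "0 3 * * *"               # every day at 03:00
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: task
              # Replace <YOUR-CR-NAME> with the name of your container registry
              image: registry.digitalocean.com/<YOUR-CR-NAME>/hellok8s:latest
```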

These topics will be covered in future blog posts.